Rise of AI deepfakes sparks concerns over digital blackface

AI-driven digital blackface is surging in the U.S., with deepfakes and stereotypes spreading via social media and politics, raising alarms over racism and disinformation.

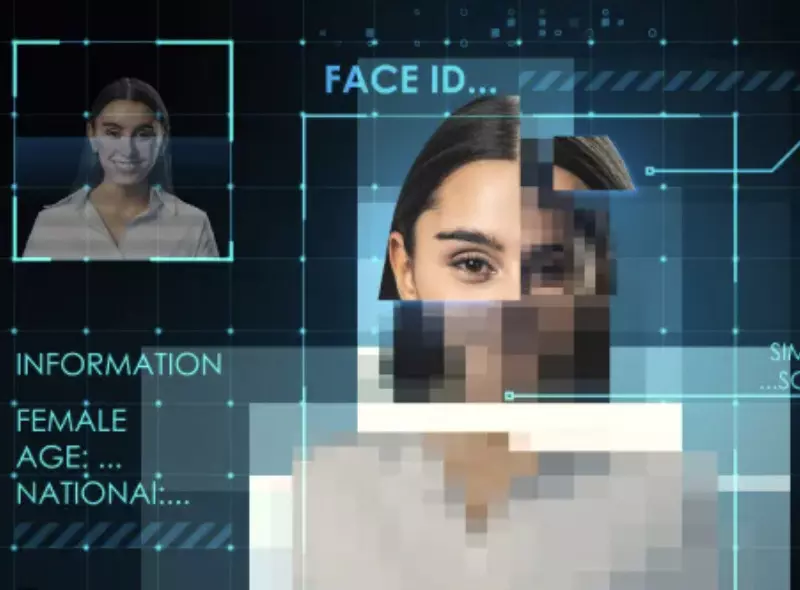

AI-generated videos and images mimicking Black identities are spreading rapidly across social media and political discourse, raising concerns about racism, disinformation, and state-backed manipulation of reality in the U.S.

The rise of generative artificial intelligence and the return of Donald Trump to the White House have fueled a sharp increase in digital blackface—the use of fabricated or exaggerated Black identities online to reinforce racist stereotypes, spread misinformation, or score political points.

From viral AI-generated TikTok videos falsely portraying Black Americans abusing food stamp benefits, to doctored images circulated by official government accounts, scholars and civil rights advocates warn that the phenomenon has entered a more dangerous phase. What once existed largely as online cultural appropriation has, they argue, evolved into a tool of mass disinformation.

Late in 2025, as the U.S. government shutdown disrupted SNAP benefits, AI-generated videos depicting Black women boasting about welfare fraud spread rapidly online. Despite visible AI watermarks, the clips were amplified by right-wing commentators and were even briefly treated as authentic by outlets such as Fox News before corrections were issued. Critics note that such narratives obscure basic facts, including that white Americans remain the largest group of SNAP recipients.

According to Safiya Umoja Noble, author of Algorithms of Oppression, generative AI has accelerated the recycling of centuries-old racist tropes. “The state is bending reality to fit its imperatives,” she said, warning that AI systems trained on biased data can be weaponized by those in power.

The term digital blackface, coined in academic literature in 2006, refers to non-Black users adopting Black speech, imagery, or personas online without cultural accountability. While earlier forms included reaction GIFs and the use of Black slang, AI tools now enable hyperrealistic deepfakes that blur the line between satire, propaganda, and harassment.

One flashpoint involved AI-generated videos of Martin Luther King Jr., produced using tools such as OpenAI’s video models. The clips, which falsely depicted King in criminal or inflammatory scenarios, drew condemnation from his family and reignited debate over “synthetic resurrection.” Similar misuse has targeted cultural figures like Beyoncé, whose likeness is frequently deployed in memes detached from context or consent.

The Trump administration itself has been accused of amplifying such tactics. Earlier this year, an altered image of Minnesota activist Nekima Levy Armstrong was shared by the official White House X account, while racially offensive imagery involving the Obamas circulated on Trump-linked platforms—moves critics describe as state-enabled digital blackface.

Technology companies have taken limited steps to curb abuses, including restricting deepfakes of certain public figures and removing controversial AI-generated characters. However, advocacy groups argue enforcement remains inconsistent and reactive, while content moderation struggles to keep pace with AI output volumes.

Despite the grim outlook, some researchers believe the current surge may fade as public awareness grows and social norms shift. Still, many warn that without stronger safeguards, AI-driven digital blackface will continue to expose Black users to harassment and distort public discourse.

As Noble puts it, when governments and platforms align, “finding material to support a political agenda becomes easy—especially when reality itself can be rewritten.”